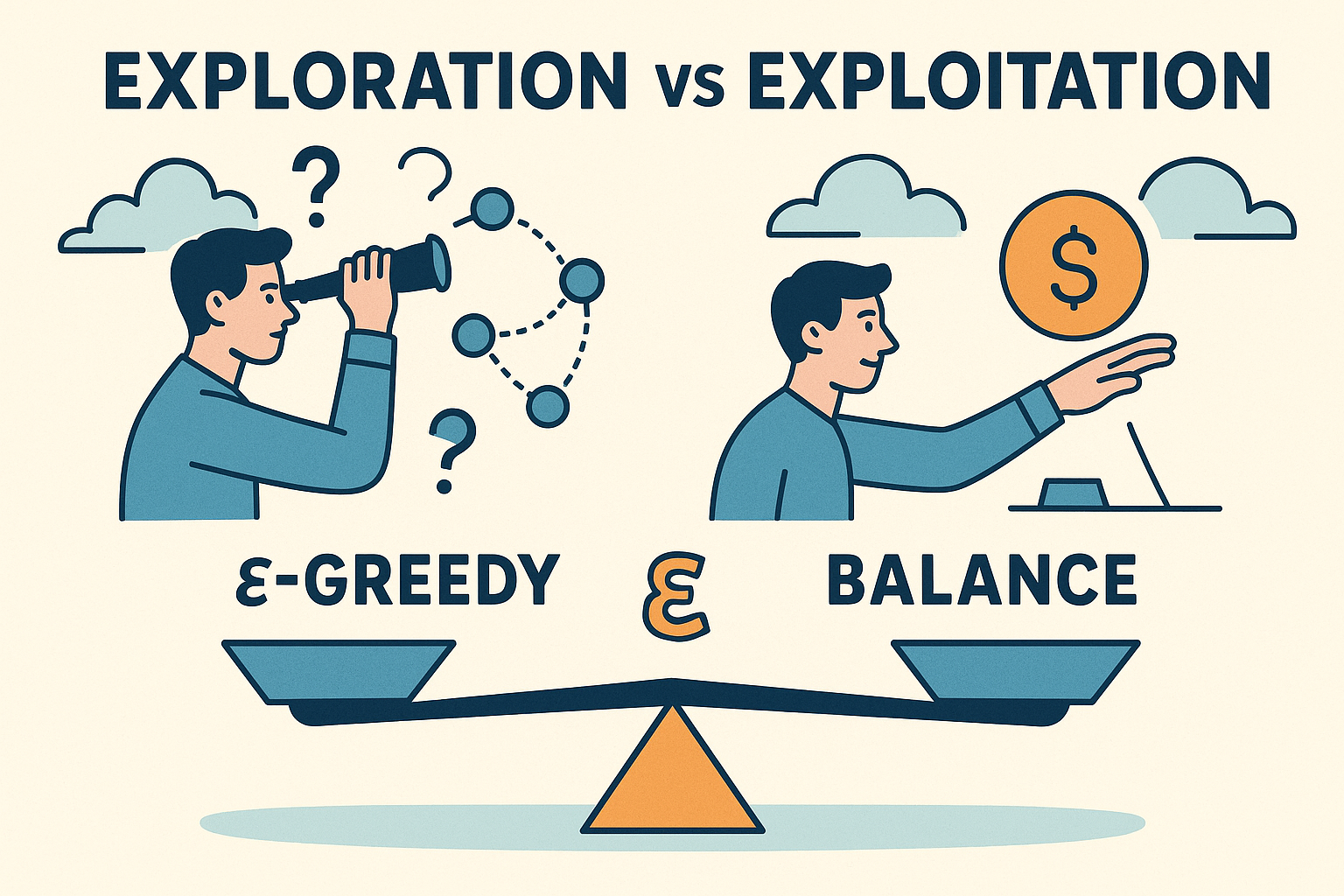

The exploration-exploitation dilemma: do you try new things (might be better) or stick with what works (guaranteed decent)?

Too little exploration: miss optimal actions Too much exploration: waste time on bad actions

Why exploration matters

Imagine a game where:

- Action A gives +1 reward always

- Action B gives +0 usually, but +100 rarely

Greedy agent tries B once, gets 0, never tries again. Misses the jackpot.

Interactive demo: Exploration Animation

Epsilon-greedy

Simplest approach:

- Probability ε: random action

- Probability 1-ε: best known action

def epsilon_greedy(Q, state, epsilon):

if np.random.random() < epsilon:

return np.random.randint(len(Q[state]))

return np.argmax(Q[state])

Often decay ε over time:

epsilon = max(0.01, epsilon * 0.995) # decay each episode

Simple, works okay. But explores blindly - random actions might not be informative.

Boltzmann (softmax) exploration

Action probability proportional to Q-value:

$$P(a|s) = \frac{\exp(Q(s,a)/\tau)}{\sum_{a’}\exp(Q(s,a’)/\tau)}$$

τ (temperature) controls exploration:

- High τ: uniform distribution (explore)

- Low τ: concentrate on best (exploit)

- τ → 0: greedy

def boltzmann(Q, state, tau):

q_values = Q[state]

exp_q = np.exp(q_values / tau)

probs = exp_q / exp_q.sum()

return np.random.choice(len(q_values), p=probs)

Upper Confidence Bound (UCB)

Optimism in the face of uncertainty.

$$A_t = \arg\max_a \left[ Q_t(a) + c\sqrt{\frac{\ln t}{N_t(a)}} \right]$$

Bonus for uncertainty (less-tried actions). Explores systematically.

def ucb(Q, counts, t, c=2):

ucb_values = Q + c * np.sqrt(np.log(t) / (counts + 1e-5))

return np.argmax(ucb_values)

Provably optimal for multi-armed bandits.

Thompson Sampling

Bayesian approach:

- Maintain belief distribution over Q-values

- Sample from belief

- Act greedily on sample

# For Bernoulli bandits

def thompson_sampling(successes, failures):

samples = [np.random.beta(s + 1, f + 1)

for s, f in zip(successes, failures)]

return np.argmax(samples)

Natural exploration: uncertain actions get sampled more.

Curiosity-driven exploration

For complex environments, add intrinsic reward for novelty.

Prediction error: Reward for visiting states the model predicts poorly.

$$r_i = |f(\phi(s), a) - \phi(s’)|^2$$

Where f is forward model, φ is feature extractor.

Count-based: Bonus inversely proportional to visit count.

$$r_i = \frac{\beta}{\sqrt{N(s)}}$$

Random Network Distillation (RND)

State-of-the-art curiosity:

- Fixed random network produces features

- Learned network predicts those features

- Prediction error = novelty

Novel states are hard to predict.

# Simplified

random_net = RandomNetwork() # fixed

predictor = Predictor() # trained

intrinsic_reward = ((random_net(state) - predictor(state)) ** 2).mean()

Comparison

| Method | Pros | Cons |

|---|---|---|

| ε-greedy | Simple | Blind exploration |

| Boltzmann | Smooth | Temperature tuning |

| UCB | Principled | Assumes stationarity |

| Thompson | Bayesian optimal | Needs prior |

| Curiosity | Sparse rewards | Can get distracted |

Exploration strategies unlocked! Star ML Animations and share this RL guide with others!